On Sept. 16th, Foreign Policy magazine printed an article about the power of computers to foreshadow revolutions around the world. The writer claims that in the not-so-distant future, technology and supercomputers will be able to predict public unrest and reveal remarkable insights into how society functions. Drawing extensively from Kalev Leetaru’s work on “Culturomics,” the article describes how, by monitoring social networking sites, social media, and news, scientists can perform “sentiment mining” to draw conclusions about the tone and polarity of text. Sentiment mining essentially means measuring whether a given word or text is positive, negative, or neutral, and, in more advanced forms, inferring the emotional states expressed in whole documents.
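To make “polarity” a little more concrete, here is a minimal sketch of lexicon-based scoring, one common and very simple way to do sentiment mining. The word lists and the `polarity` and `label` functions are hypothetical illustrations; they are not the lexicon or method used in Leetaru’s work or by Global Pulse.

```python
import re

# Hypothetical toy lexicon; real systems use far larger, curated word lists.
POSITIVE = {"good", "hope", "peace", "growth", "happy", "stable"}
NEGATIVE = {"bad", "fear", "crisis", "protest", "angry", "unrest"}


def polarity(text: str) -> float:
    """Score text in [-1, 1] as (positive hits - negative hits) / total hits.

    Returns 0.0 (neutral) when no lexicon words appear.
    """
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)  # booleans sum as 0/1
    neg = sum(w in NEGATIVE for w in words)
    if pos + neg == 0:
        return 0.0
    return (pos - neg) / (pos + neg)


def label(score: float, threshold: float = 0.1) -> str:
    """Map a polarity score to 'positive', 'negative', or 'neutral'."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    for t in [
        "Growth is stable and people have hope.",
        "Fear of crisis fuels protest and unrest.",
        "The meeting is on Tuesday.",
    ]:
        s = polarity(t)
        print(f"{s:+.2f} {label(s)}: {t}")
```

Counting words this way has no notion of negation or sarcasm: “not good” still scores as positive, because only the word “good” is in the lexicon.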

To me, all of this seems a bit surreal: a computer understanding tone and emotion in text well enough to predict future events? Stranger still, despite my initial skepticism, I have heard about this vision of technology TWICE this week already, and the hypothesis does not seem to be fading away.

Over the weekend, I attended a lecture in which Robert Kirkpatrick from Global Pulse (a UN initiative) discussed a future goal where a “platform will be developed as a global public good based on open design, free and open source and open standards.”

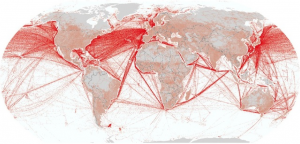

Their goal is to be able to convert all types of media data into measurable scales and determine the state of the nation on given topics.

The idea for this endeavor initially came from past data that revealed spikes or dips in emotion toward a range of topics preceding events such as the financial crisis or the Egyptian uprisings. Even acknowledging that there is a knowledge gap, and that hindsight is often more encouraging than forecasting, this technique of curbing a problem before it starts appears to be the direction our world is moving in.
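Spotting “spikes or dips” in aggregate emotion can be sketched as flagging days whose average sentiment departs sharply from a trailing baseline. The rolling z-score detector below is an assumed illustration, not Global Pulse’s method; `find_anomalies`, the window size, the threshold, and the sample series are all hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(series, window=7, z_threshold=3.0):
    """Flag points that deviate from the trailing window's mean by more
    than z_threshold sample standard deviations.

    Only past values form the baseline, so each check uses data that
    would have been available on that day. Returns a list of
    (index, value, z_score) tuples.
    """
    flagged = []
    for i in range(window, len(series)):
        baseline = series[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            continue  # flat baseline: z-score undefined, so skip this day
        z = (series[i] - mu) / sigma
        if abs(z) > z_threshold:
            flagged.append((i, series[i], round(z, 2)))
    return flagged


if __name__ == "__main__":
    # Made-up daily mean polarity scores with one sharp dip (illustrative only).
    daily = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.11,
             0.12, 0.10, -0.45, 0.08, 0.11]
    for day, value, z in find_anomalies(daily):
        print(f"day {day}: mean polarity {value:+.2f} (z = {z})")
```

A flagged dip is only a statistical outlier relative to recent days; turning it into a prediction of unrest is exactly the hindsight-versus-forecasting gap described above.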

As with any new breakthrough in predictive political discourse, serious questions of legitimacy and reliability arise. Personally, I have a number of questions about the ultimate goals of, and the incentives for, developing this project further.

To begin with, what incentives does the UN have to promote this platform and spend millions of dollars on predictive technologies? I am curious why a single nation would not take on this task instead of the UN spearheading it, when the UN could simply adopt more reliable technologies once they are fully developed. Since the UN is predominantly using funds from the UK and Sweden, it clearly has much more liquid capital to put into the project than the computer hackers who originally proposed the idea. However, I imagine that because privacy laws differ from nation to nation, this project has had to overcome many hurdles, despite access to all of the useless Facebook posts and tweets floating in unprotected cyberspace.

Another hesitation I have about this project is that the UN claims its predominant goal is to better understand the poor and how to handle stress in developing countries. In the lectures I attended, the Millennium Development Goals were often cited as evidence that this kind of technology will help better predict how these people are living. However, I find an inherent flaw in this argument: the Millennium villagers are not necessarily the ones using social media. While it is true that reports and news articles are written about these projects, it does not appear that these people will truly be expressing their “sentiment” through personal tweets and blogs. The project is therefore self-selective from the outset. I would be much more willing to accept it if the hurdle of creating technology that bridges the data-emotion gap were tackled first, before the project is presented under the guise of helping the very people whom technology has long left behind.

Still, living in an age when technology and computers are slowly seeping into every aspect of our lives, I know I will have to reconcile myself to the fact that these computers will soon be able to read our emotions as well. Understandably, they will have a difficult time with sarcasm and nuance, so I suppose a sense of humor will soon be the only way to beat the system. #smileherecomesAI

Katherine Peterson is a Program and Research Intern with the SISGI Group focused on theories of development, globalization, and political ramifications of development work.